No edit summary Tag: 2017 source edit |

No edit summary |

||

| Line 1: | Line 1: | ||

== What is the difference between the AI Editing Assistant and the Chatbot? == | |||

The [[Manual:Extension/AIEditingAssistant|AI Editing Assistant]] integrates an LLM (with "world knowledge," without "expertise" from the wiki) into the editing mode, allowing the content of a page to be edited directly. The Chatbot integration makes the expertise from the wiki available to a chatbot (RAG data export) and is primarily used to find and use existing information. | |||

== How does the integrated Chatbot work? == | == How does the integrated Chatbot work? == | ||

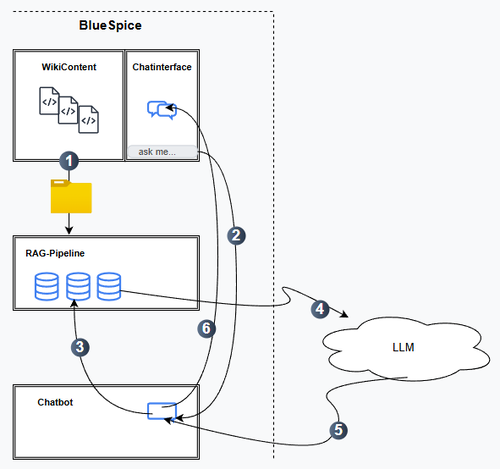

[[File:Integrated wiki chatbot.png|alt=Diagram of necessary components|thumb|500x500px|Chatbot system]] | [[File:Integrated wiki chatbot.png|alt=Diagram of necessary components|thumb|500x500px|Chatbot system]] | ||

| Line 11: | Line 14: | ||

== What are the LLM requirements? == | == What are the LLM requirements? == | ||

* BlueSpice does not provide the LLM that is a prerequisite for the chatbot feature. The LLM must be provided by the customer and the | * BlueSpice does not provide the LLM that is a prerequisite for the chatbot feature. The LLM must be provided by the customer and the wiki must be able to communicate with it by exchanging API keys. | ||

* Currently, the following LLMs are supported: | * Currently, the following LLMs are supported: | ||

** ''OpenAI'' and ''OpenAI''-compatible systems (including, for example, ''Microsoft Azure'', ''DeepSeek'', ''Google Gemini'') | ** ''OpenAI'' and ''OpenAI''-compatible systems (including, for example, ''Microsoft Azure'', ''DeepSeek'', ''Google Gemini'') | ||

** ''Ollama, Mistral AI'' (open source, e.g., via IONOS) | ** ''Ollama, Mistral AI'' (open source, e.g., via IONOS) | ||

* Cloud hosting requirement: Internet access for the LLM is required (common encryption). | * Cloud hosting requirement: Internet access for the LLM is required (common encryption). | ||

=== RAG pipeline === | |||

== How does the RAG pipeline work? == | |||

* The wiki's content must be processed in a RAG pipeline so that the LLM can learn from it. | |||

* Functions: | |||

** Retrieval: Relevant information related to a user query is retrieved from the wiki (= document collections) and provided in context. | |||

** Generation: The LLM uses the retrieved information as context to formulate a content-based answer. | |||

* Integrated RAG pipeline (extension WikiRAG): | |||

** Prepares the raw data for further processing in the LLM. | |||

** The RAG pipeline also handles access control (ACL) and metadata management. | |||

* Customers can operate their own RAG pipeline. An external RAG pipeline must independently manage ACL and metadata management. BlueSpice only provides the raw data. | |||

* Limitations in the cloud: A dedicated RAG pipeline has to be accessible via the internet. However, this is not unprotected public access; rather, access is achieved using common security and encryption mechanisms. | |||

== Can some wiki content be excluded as data source? == | |||

Yes, | |||

* the user namespace and non-content namespaces are fundamentally excluded from output in the chatbot. | |||

* there is a [[Manual:Extension/ChatBot#Excluding pages as a data source|behavior switch in the page options]] that specifies whether a page should be indexed for the chatbot or not. | |||

Revision as of 11:48, 7 October 2025

What is the difference between the AI Editing Assistant and the Chatbot?

The AI Editing Assistant integrates an LLM into the editing mode so that the content of a page can be edited directly. This LLM brings general "world knowledge" but has no access to the "expertise" stored in the wiki. The Chatbot integration, by contrast, makes the wiki's expertise available to a chatbot through a RAG data export and is primarily used to find and use existing information.

How does the integrated Chatbot work?

- The wiki provides its raw data to the RAG pipeline for processing.

- A user sends a request to the chatbot through the chat interface.

- The chatbot passes the request to the RAG pipeline, which processes the data for that request.

- The result is passed to the LLM, which generates the text.

- The generated text is returned to the chatbot.

- The chatbot passes the answer to the chat interface, where it is displayed.
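The hand-offs listed above can be traced in a few lines of Python. This is only an illustrative sketch: the function names (`rag_pipeline`, `stub_llm`, `chatbot`, `chat_interface`) are hypothetical and not part of BlueSpice, the "pipeline" simply concatenates the raw data, and the LLM is replaced by a stub.

```python
from typing import Callable, List


def rag_pipeline(question: str, raw_data: List[str]) -> str:
    """Hypothetical pipeline stage: combine wiki raw data and the request into a prompt."""
    context = "\n".join(raw_data)
    return f"Context:\n{context}\n\nQuestion: {question}"


def stub_llm(prompt: str) -> str:
    """Placeholder for the customer-provided LLM; it only reports the prompt size."""
    return f"Stub answer derived from {len(prompt)} prompt characters."


def chatbot(question: str, raw_data: List[str], llm: Callable[[str], str]) -> str:
    """Pass the request to the pipeline, then hand the resulting prompt to the LLM."""
    prompt = rag_pipeline(question, raw_data)
    return llm(prompt)


def chat_interface(question: str, raw_data: List[str],
                   llm: Callable[[str], str] = stub_llm) -> str:
    """Send the request to the chatbot and format the answer for display."""
    answer = chatbot(question, raw_data, llm)
    return f"Q: {question}\nA: {answer}"


if __name__ == "__main__":
    # Invented sample data standing in for the raw data exported by the wiki.
    wiki_raw_data = ["VPN setup: Install the VPN client and sign in."]
    print(chat_interface("How do I set up the VPN?", wiki_raw_data))
```

Passing the LLM in as a callable mirrors the fact that the language model is an external component that can be swapped without touching the rest of the chain.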

What are the LLM requirements?

- BlueSpice does not provide the LLM that the chatbot feature requires. The customer must provide the LLM, and the wiki must be able to communicate with it and authenticate using an API key.

- Currently, the following LLMs are supported:

  - OpenAI and OpenAI-compatible systems (including, for example, Microsoft Azure, DeepSeek, Google Gemini)

  - Ollama, Mistral AI (open source, e.g., via IONOS)

- Cloud hosting requirement: The LLM must be reachable over the internet. The connection is protected with common encryption.
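To illustrate what API-key-based communication with an OpenAI-compatible system typically looks like, the following sketch builds (but does not send) a chat completion request using only the Python standard library. The base URL, API key, and model name are placeholders, and the helper `build_chat_request` is hypothetical; how BlueSpice itself issues these requests is not described here.

```python
import json
import urllib.request


def build_chat_request(base_url: str, api_key: str, model: str,
                       question: str) -> urllib.request.Request:
    """Build a POST request for an OpenAI-compatible chat completions endpoint."""
    payload = {
        "model": model,
        "messages": [{"role": "user", "content": question}],
    }
    return urllib.request.Request(
        url=base_url.rstrip("/") + "/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={
            # The API key is sent as a bearer token.
            "Authorization": f"Bearer {api_key}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


# Placeholder values: replace with the endpoint, key, and model of your provider.
request = build_chat_request(
    base_url="https://llm.example.com/v1",
    api_key="sk-placeholder",
    model="example-model",
    question="What is BlueSpice?",
)

if __name__ == "__main__":
    print(request.get_method(), request.full_url)
```

Sending the request with `urllib.request.urlopen(request)` requires a reachable endpoint and a valid key, which is why the sketch stops at constructing it.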

How does the RAG pipeline work?

- The wiki's content must be processed in a RAG pipeline so that the LLM can draw on it when answering. The LLM is not retrained on the content; the relevant passages are supplied as context with each request.

- Functions:

  - Retrieval: Information relevant to a user query is retrieved from the wiki (the document collection) and provided as context.

  - Generation: The LLM uses the retrieved information as context to formulate a content-based answer.

- Integrated RAG pipeline (extension WikiRAG):

  - Prepares the raw data for further processing by the LLM.

  - Also handles access control (ACL) and metadata management.

- Customers can operate their own RAG pipeline. An external RAG pipeline must handle ACL and metadata management itself. In that case, BlueSpice only provides the raw data.

- Limitations in the cloud: A customer-operated RAG pipeline has to be accessible via the internet. This does not mean unprotected public access; access is secured with common security and encryption mechanisms.
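The interplay of retrieval, access control, and generation described above can be sketched as follows. This is a simplified illustration, not the WikiRAG implementation: relevance is approximated by plain keyword overlap (production pipelines usually rely on embeddings or a search index), and the `Chunk` type, the `allowed_groups` field, and the sample data are assumptions made for the example.

```python
import re
from dataclasses import dataclass, field
from typing import List, Set


@dataclass
class Chunk:
    page: str
    text: str
    # Hypothetical ACL metadata: groups allowed to read the source page.
    allowed_groups: Set[str] = field(default_factory=set)


def tokens(text: str) -> Set[str]:
    """Lowercase word set used for the naive overlap score."""
    return set(re.findall(r"\w+", text.lower()))


def retrieve(query: str, chunks: List[Chunk], user_groups: Set[str],
             top_k: int = 2) -> List[Chunk]:
    """Return the top_k readable chunks that share the most words with the query."""
    query_tokens = tokens(query)
    # ACL check first: chunks the user may not read never reach the LLM.
    visible = [c for c in chunks if c.allowed_groups & user_groups]
    scored = [(len(query_tokens & tokens(c.text)), c) for c in visible]
    scored = [(score, c) for score, c in scored if score > 0]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [c for _, c in scored[:top_k]]


def build_prompt(query: str, retrieved: List[Chunk]) -> str:
    """Generation input: the retrieved text is passed to the LLM as context."""
    context = "\n".join(f"[{c.page}] {c.text}" for c in retrieved)
    return f"Answer using only this context:\n{context}\n\nQuestion: {query}"


# Invented sample data for illustration.
CHUNKS = [
    Chunk("Onboarding", "New employees receive a laptop on their first day.", {"user"}),
    Chunk("Salary bands", "Salary bands for employees are reviewed every year.", {"hr"}),
    Chunk("Cafeteria", "The cafeteria opens at noon.", {"user"}),
]
QUERY = "When do new employees receive a laptop?"

if __name__ == "__main__":
    print(build_prompt(QUERY, retrieve(QUERY, CHUNKS, {"user"})))
```

Filtering by ACL before scoring means restricted content is never placed in the prompt, which is the responsibility an external pipeline has to take on itself.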

Can some wiki content be excluded as a data source?

Yes:

- The user namespace and non-content namespaces are always excluded from chatbot output.

- A behavior switch in the page options specifies whether a page is indexed for the chatbot.
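The two exclusion rules above amount to a filter over the pages offered to the chatbot. The sketch below shows that logic under stated assumptions: the namespace numbers are the standard MediaWiki IDs (0 for the main namespace, 2 for the user namespace, 10 for templates), the set of content namespaces is assumed to contain only the main namespace, and `Page`, `excluded_by_switch`, and `pages_for_chatbot` are hypothetical names, not BlueSpice APIs.

```python
from dataclasses import dataclass
from typing import Iterable, List

NS_MAIN = 0       # standard MediaWiki main namespace
NS_USER = 2       # standard MediaWiki user namespace
NS_TEMPLATE = 10  # standard MediaWiki template namespace

# Assumption: only the main namespace is configured as a content namespace.
CONTENT_NAMESPACES = {NS_MAIN}


@dataclass
class Page:
    title: str
    namespace: int
    # Hypothetical flag standing in for the behavior switch in the page options.
    excluded_by_switch: bool = False


def pages_for_chatbot(pages: Iterable[Page]) -> List[Page]:
    """Keep content-namespace pages that are not opted out of chatbot indexing."""
    return [
        p for p in pages
        if p.namespace in CONTENT_NAMESPACES
        and p.namespace != NS_USER  # user pages stay out even if misconfigured
        and not p.excluded_by_switch
    ]


# Invented sample pages for illustration.
PAGES = [
    Page("Installation guide", NS_MAIN),
    Page("User:Alice", NS_USER),
    Page("Template:Infobox", NS_TEMPLATE),
    Page("Internal draft", NS_MAIN, excluded_by_switch=True),
]

if __name__ == "__main__":
    for page in pages_for_chatbot(PAGES):
        print(page.title)
```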