Created page with "{{CollapsibleWrapper}}" |

No edit summary Tag: 2017 source edit |

||

| Line 1: | Line 1: | ||

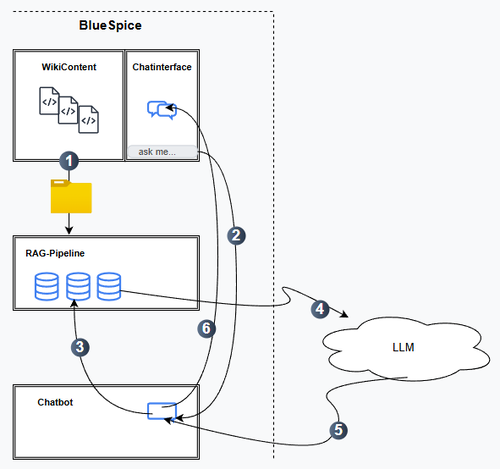

== How does the integrated chatbot work? ==

[[File:Integrated wiki chatbot.png|alt=Diagram of necessary components|thumb|500x500px|Chatbot system]]

* The wiki provides its raw data to the RAG pipeline, where it is processed.
* A user sends a request to the chatbot via the chat interface.
* The chatbot passes the request to the RAG pipeline, which retrieves the wiki data relevant to it.
* The request and the retrieved data are passed to the LLM, which generates the response text.
* The generated text is returned to the chatbot.
* The chatbot passes the answer to the chat interface, where it is displayed.

{{Clear}}
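The six steps above can be sketched in Python as follows. This is a minimal, hypothetical illustration, not BlueSpice's implementation: it assumes a naive word-overlap retriever in place of a real RAG pipeline and treats the LLM as a plain function. The names `RagPipeline`, `ingest`, `retrieve`, and `answer` are invented for illustration.

```python
# Hypothetical sketch of the chatbot flow; names are illustrative, not BlueSpice APIs.
from dataclasses import dataclass, field
from typing import Callable


@dataclass
class RagPipeline:
    """Toy RAG store: ranks wiki pages by word overlap with the request (an assumption)."""
    pages: dict[str, str] = field(default_factory=dict)

    def ingest(self, title: str, text: str) -> None:
        # Step 1: the wiki hands raw page text to the pipeline.
        self.pages[title] = text

    def retrieve(self, query: str, k: int = 2) -> list[str]:
        # Step 3: return the k pages sharing the most words with the request.
        words = set(query.lower().split())
        ranked = sorted(
            self.pages.items(),
            key=lambda item: len(words & set(item[1].lower().split())),
            reverse=True,
        )
        return [f"{title}: {text}" for title, text in ranked[:k]]


def answer(request: str, rag: RagPipeline, llm: Callable[[str], str]) -> str:
    # Step 2: the request arrives from the chat interface.
    context = rag.retrieve(request)
    # Step 4: the LLM generates text from the request plus the retrieved context.
    prompt = (
        "Answer using this wiki context:\n"
        + "\n".join(context)
        + f"\n\nQuestion: {request}"
    )
    # Steps 5-6: the generated text goes back to the chatbot for display.
    return llm(prompt)


if __name__ == "__main__":
    rag = RagPipeline()
    rag.ingest("Chatbot", "The chatbot answers questions using wiki content")
    rag.ingest("Backup", "Backups run every night")
    # A stub "LLM" that just echoes the top-ranked context line.
    print(answer("How does the chatbot answer questions?", rag, lambda p: p.splitlines()[1]))
    # prints "Chatbot: The chatbot answers questions using wiki content"
```

Passing the LLM in as a function keeps the sketch runnable without a model; a real RAG pipeline would typically rank content using vector embeddings rather than shared words.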

== What are the LLM requirements? ==

* BlueSpice does not provide the LLM that the chatbot feature requires. The customer must provide the LLM, and the wiki must be able to communicate with it using an API key.
* Currently, the following LLM platforms are supported:
** ''OpenAI'' and ''OpenAI''-compatible systems (for example, ''Microsoft Azure'', ''DeepSeek'', ''Google Gemini'')
** ''Ollama'' and ''Mistral AI'' (open source, e.g., hosted via IONOS)
* Cloud hosting requirement: the LLM must be reachable over the Internet, with communication secured by standard encryption.
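To illustrate how a wiki can talk to an ''OpenAI''-compatible LLM using an API key, here is a minimal Python sketch that builds (but does not send) a request to the OpenAI-style `/chat/completions` route. It is an assumption-based example, not BlueSpice's configuration: `build_chat_request` is a hypothetical helper, and the base URL, model name, and key are placeholders.

```python
# Hypothetical sketch: building a request for an OpenAI-compatible chat endpoint.
# Base URL, model name and API key are placeholders, not BlueSpice settings.
import json
import urllib.request


def build_chat_request(base_url: str, api_key: str, model: str, question: str) -> urllib.request.Request:
    """Return a POST request for the OpenAI-style /chat/completions route."""
    body = {
        "model": model,
        "messages": [{"role": "user", "content": question}],
    }
    return urllib.request.Request(
        url=base_url.rstrip("/") + "/chat/completions",
        data=json.dumps(body).encode("utf-8"),
        headers={
            # The API key is sent as a bearer token with every request.
            "Authorization": f"Bearer {api_key}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


if __name__ == "__main__":
    # Example target: a local Ollama server, which exposes an OpenAI-compatible API under /v1.
    req = build_chat_request("http://localhost:11434/v1", "placeholder-key", "llama3", "What is BlueSpice?")
    print(req.full_url)
    # Actually sending it requires a reachable server, e.g.:
    # with urllib.request.urlopen(req, timeout=30) as resp:
    #     print(json.load(resp)["choices"][0]["message"]["content"])
```

Because all ''OpenAI''-compatible systems accept the same request shape, switching platforms in this sketch only changes the base URL, model name, and key.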

=== RAG pipeline ===

Revision as of 11:18, 7 October 2025
